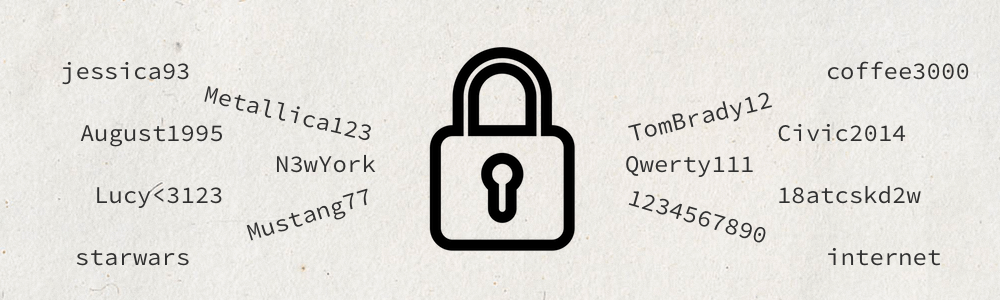

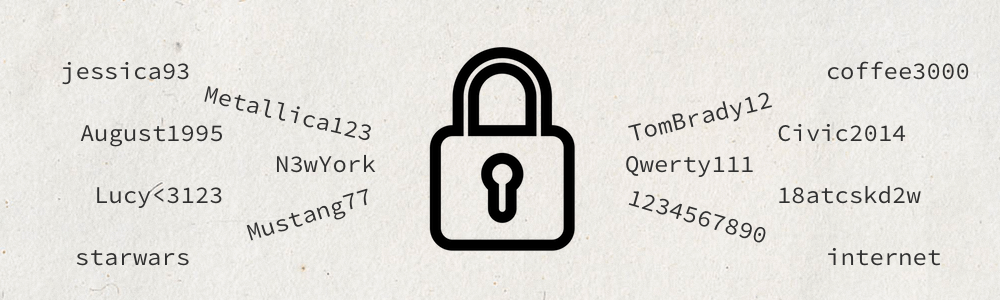

I've noticed a trend when working on my web security side projects. I was looking through passwords used in leaked databases (you can find a couple here) and I found that a lot of passwords follow common patterns. A large amount of them are based off of things people see or use in their daily lives or based off their personal information.

For example, a huge number of passwords in the rockyou word list are someone's name, and then a random number appended to the end. Other common patterns I've seen are

This is interesting for brute forcing, because if someone were conducting a targeted attack, that person should be able to find a lot of this information about their target on-line. If the attacker were to collate this info, and generate a word list using common formats from that information, then they'd have a much higher chance of success using brute force than they would using common word lists.

Append some numbers to any of these, maybe change an "e" to a "3", and you've got a very common password. For example, if we know that our target's name is Bob and his wife's name is Jill, than we'd use something like

bob

bobjill

bobji11

bob1

jill1

...

bob9999

jill9999

Using jargon or common terms from the target's profession is also a common tactic, as shown by this blog post.

I wrote a word-list generator in D that uses this concept. It takes things like names, dates of birth, and favorite bands and appends common strings to the end of them and even concatenates the given phrases together. The results are then written to a word-list file for brute forcing. The code and instructions can be found here.

Man, I'm just going to gush about D for a second here.

Because of the way ranges are designed, I've managed to remove almost all memory allocations on the heap in the code. When generating a word list with 9,342 guesses for a total of 80kB, I was able to bring allocations down to less than 3,000 bytes.

How was I able to do this? Well, as an example, how often do you see code that takes a bunch of substrings, concatenates them together, and then returns the string to a function which does something else to it? Here's some code to illustrate

import std.stdio;

import std.string;

void main()

{

string result;

string name = chomp(readln()); // target first name

result ~= name ~ "\n";

result ~= name ~ "zxcvbnm\n";

result ~= name ~ "qwerty\n";

auto f = File("name.txt");

f.write(result);

}

There are a lot of unnecessary allocations in this code. Instead of writing to a temporary to hold our file data, why not just write directly to the file? Also, we can use D's ranges in order to remove concatenations of substrings:

import std.stdio;

import std.range;

import std.algorithm.iteration;

void main()

{

auto f = File("name.txt");

auto output = f.lockingTextWriter();

auto user_input = readln();

auto name = user_input.filter!(a => !a.isWhite);

output.put(chain(

name, "\n",

name, "zxcvbnm\n",

name, "qwerty\n"

));

}

Here, we're using string literals with chain to create a lazy range over

already existing memory. As our code iterates over the chain, the chain

struct handles giving the next character of each given substring.

Our user input has whitespace characters filtered out thanks to filter,

and our affixes are stored in static memory, so we have allocated no new memory

when we combine them with chain.

This range is then written directly into the file using the OutputRange interface of

lockingTextWriter, which is a common interface which allows any range to be

written to it via a call to lockingTextWriter.put.

The only memory allocates on the heap in this function is the new string returned

from readln().

Now, because we're working with ranges of characters, lockingTextWriter must necessarily

write one character at a time to the file. In some performance benchmarks I wrote,

I have seen no noticeable speed difference between writing

to a buffer and then writing the entire thing at once, vs writing a character at

a time.

Even type conversions can be non-allocating! Instead of allocating a new string

to convert from an int, instead create a lazy range of characters:

foreach (i; 1 .. 1_000)

output.put(chain(name, toChars(i), "\n"));

toChars provides a character range view over the int on the stack. So rather

than needing to allocate a new string for the 500 ints, the already existing

memory stack space and some extra for the toChars struct is all we need.

All in all, I'm very happy to see that D's range concept has practical benefits for projects other than code simplification.